To address budget constraints and explore applications of AI in usability testing beyond current uses such as form creation or summary generation, I implemented an AI role-playing method using Gemini.

Project overview

Scope:

AI UX Research, Product Design

Tools:

Figma, FigJam, Figma Make, Gemini

Goal:

To move beyond basic AI tasks (like summarization) and use AI agents to validate low-fidelity wireframes when human testing is resource-constrained.

Key Findings:

AI role-playing is an effective accelerator for identifying design flaws early, and prompt engineering (e.g., one interview at a time) is critical for accurate simulation.

Project Background

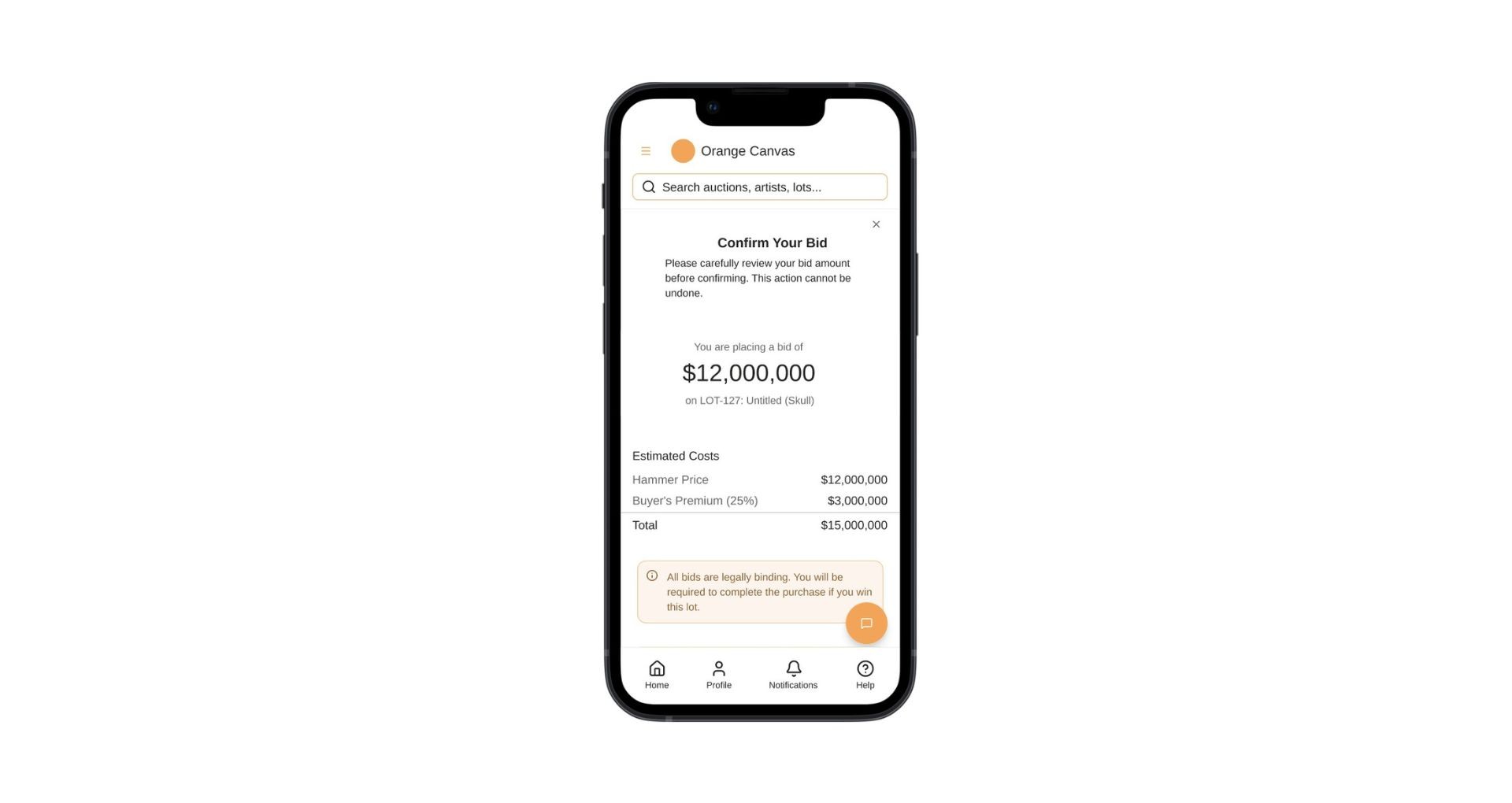

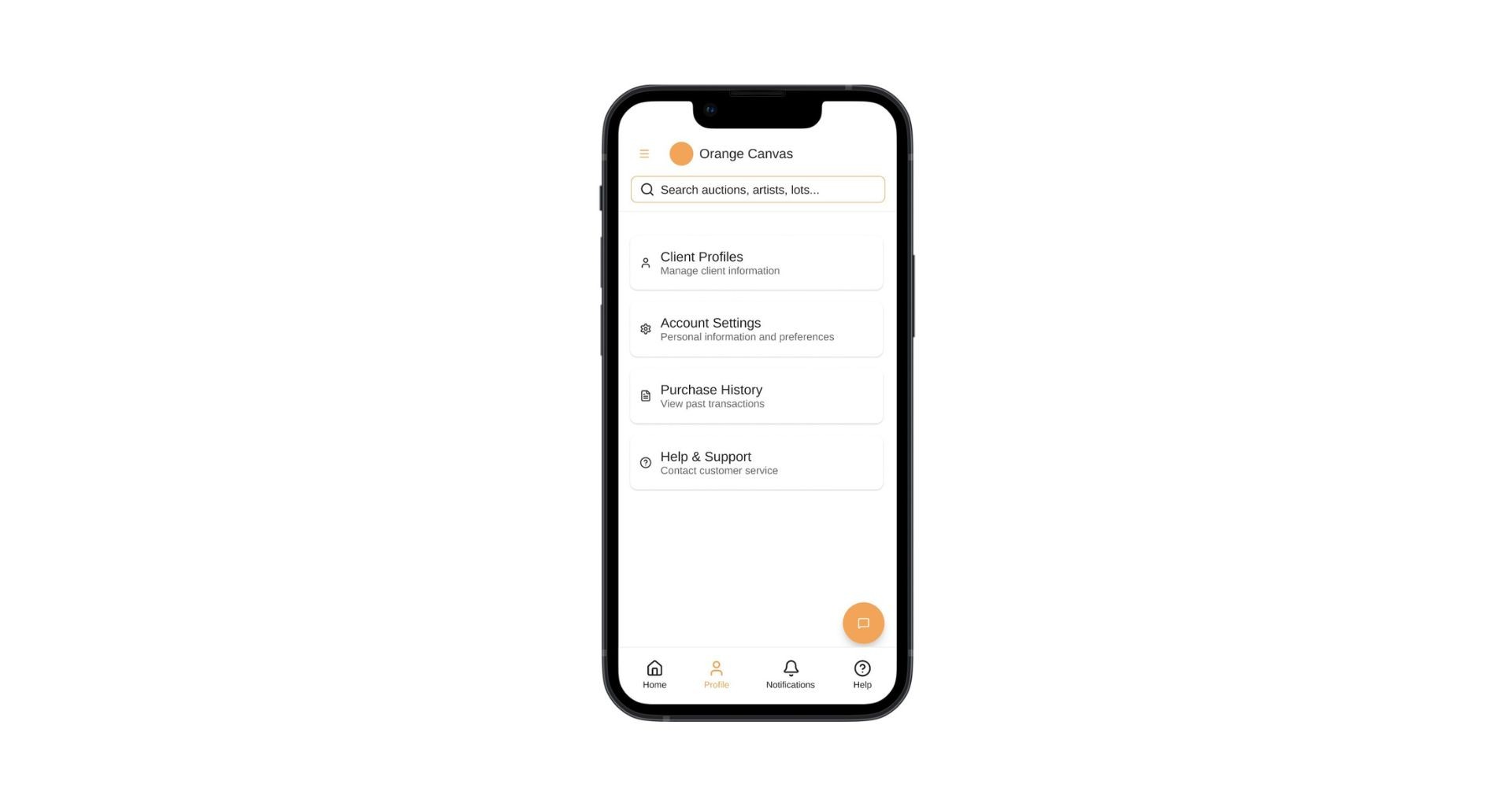

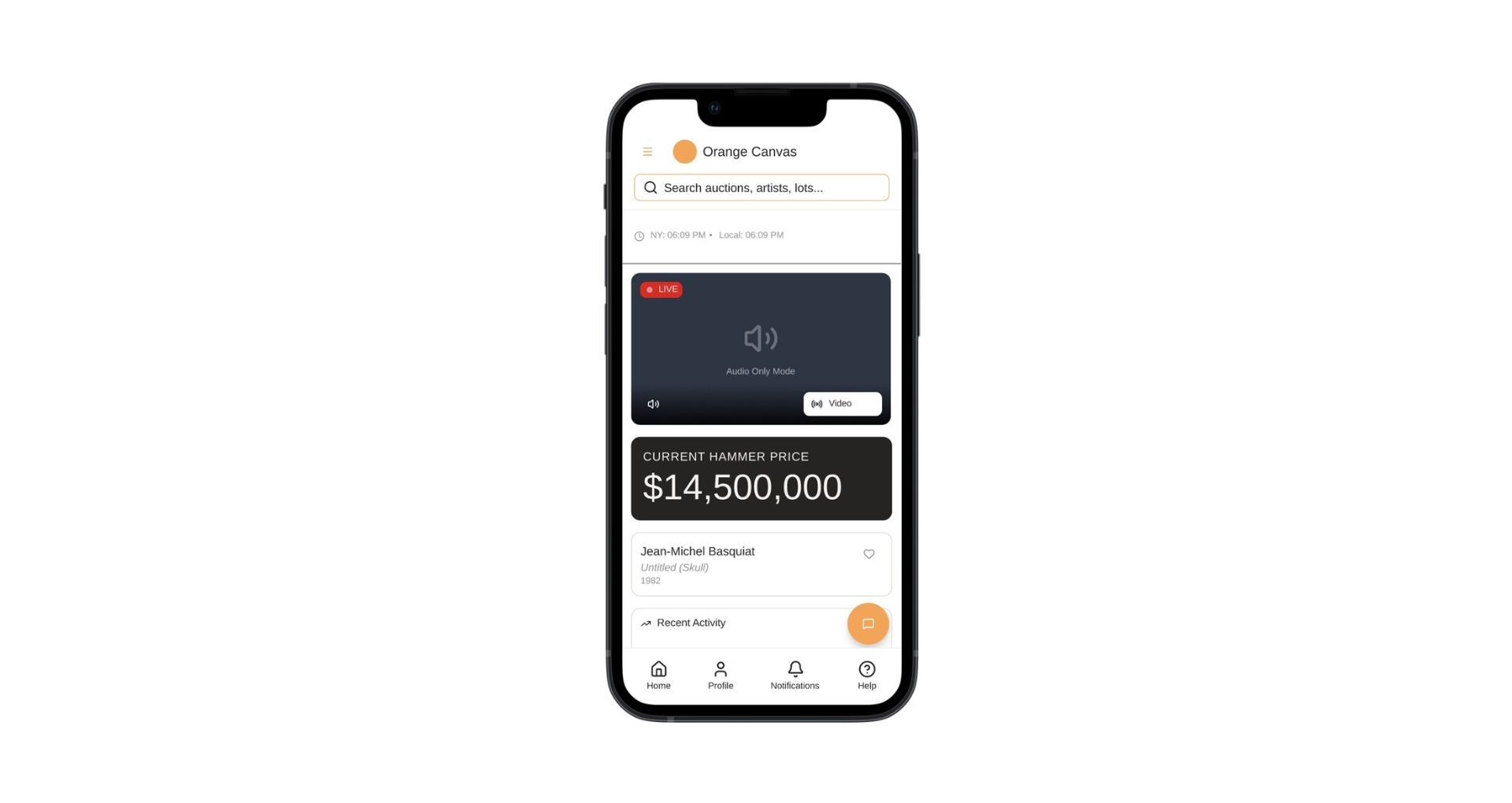

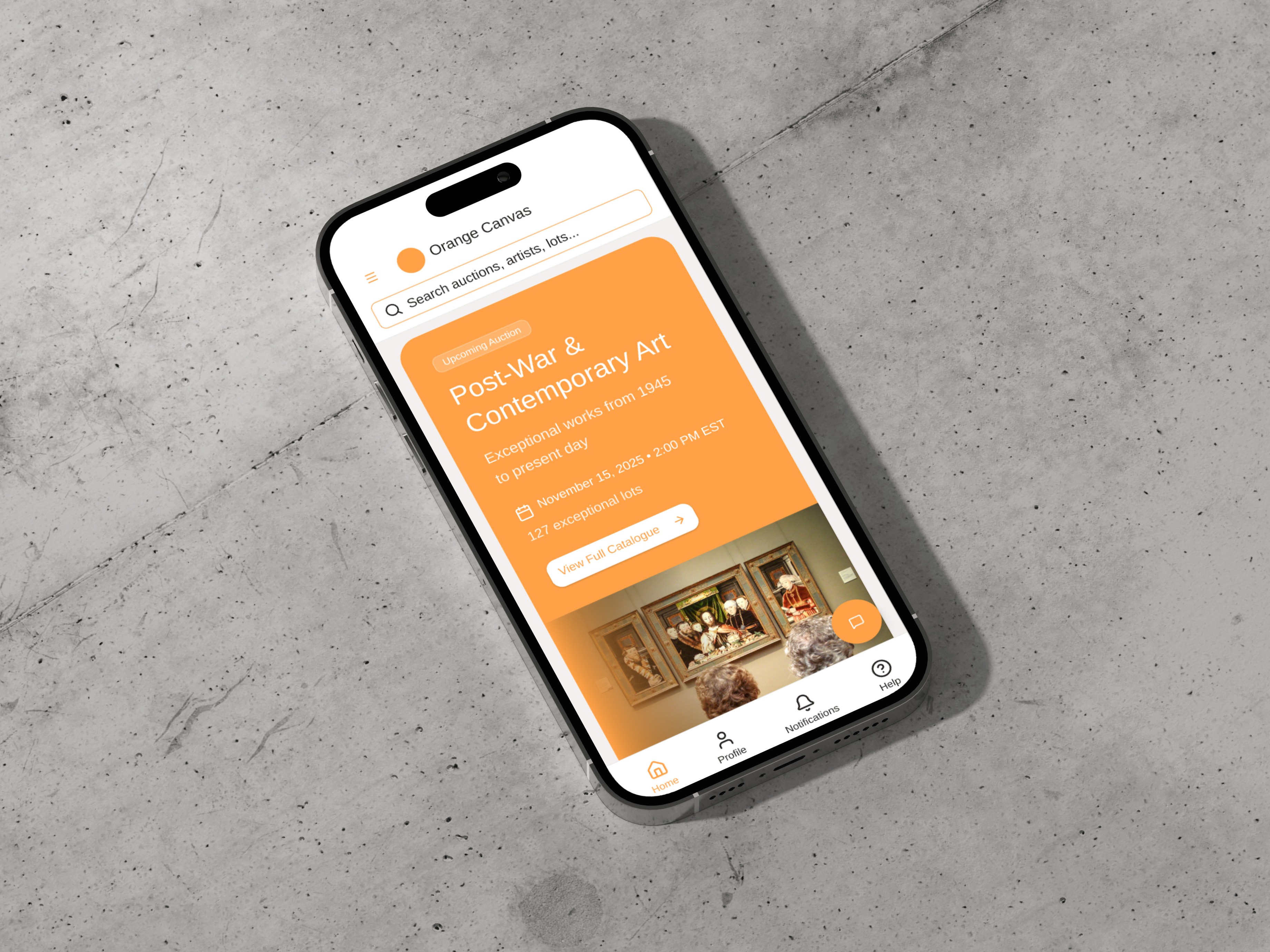

Orange Canvas is the exclusive art gallery commissioning this application to provide its clients with a real-time, mobile auction platform. This report details the Usability Testing conducted to validate the low-fidelity wireframes, building upon the foundational research (personas, value proposition) detailed in the full case study.

I utilized an AI-augmented methodology for rapid analysis of user task completion and feedback. Here, you will read about the testing goals, methodology, key findings, and recommended design iterations for the Orange Canvas Auction App.

Methodology Overview

Goal

Explore the potential of AI role-playing for usability testing given budget constraints.

AI Tool

Google Gemini (for role-playing and prioritization) and Figma AI (for categorization).

Protocol

Simulated testing sessions using AI personas generated from a pre-screen form, with tasks based on wireframe PDFs.

Synthesis

Created an affinity diagram in FigJam, categorized and summarized by Figma AI.

Prioritization

Used Gemini to prioritize the synthesized feature list, replacing standard quantitative metrics (like SUS or failure rate).

Research Plan

Crucially, this AI-based approach was executed within the framework of a formal Research Plan, and I diligently followed all steps that were feasible in an environment without human participants.

The Role-Playing Process

The experiment's core involved simulating user testing sessions with Gemini. I first leveraged Gemini to generate highly relevant user personas based on my pre-screen form.

Next, I provided the AI with my list of interview questions (basic warm-up questions and specific tasks) and the PDF of my wireframes for context.

Digital Wireframe

Crucially, I had to set the tone by instructing the AI, as I would a human participant, that there were no right or wrong answers and that its feedback was valuable for identifying design problems.

I treated each AI-generated interview as a real session, diligently filling out my note-taking spreadsheet and synthesizing the information.

Insights from AI Simulation

Instead of focusing on traditional human observation, I noted where the AI user failed or deviated and hypothesized the reason, informing future experiments.

This method worked much like an unmoderated paper-prototype test and proved effective for identifying structural gaps:

Gap Identification

The AI rarely admitted to failing an in-app task. Instead, it would often "explain" or "guess" non-existent steps needed to accomplish it.

These imaginary steps and suggestions were highly valuable for revealing missing features, illogical transitions, and gaps in the current design.
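One way to make this gap-spotting repeatable is to compare the steps the AI participant described against the steps the wireframes actually contain. The sketch below is only an illustration: the function name and the step labels are hypothetical, and it assumes the session notes were already reduced by hand to short step labels.

```python
def find_imagined_steps(described_steps, wireframe_steps):
    """Return steps the AI participant described that no wireframe screen supports."""
    # Normalize labels so trivial casing/spacing differences do not count as gaps.
    known = {step.strip().lower() for step in wireframe_steps}
    return [step for step in described_steps if step.strip().lower() not in known]


# Hypothetical step labels, not taken from the actual Orange Canvas wireframes.
wireframe_steps = ["Open auction list", "Select artwork", "Place bid"]
described_steps = [
    "Open auction list",
    "Select artwork",
    "Confirm payment method",  # a step the AI "guessed"
    "Place bid",
]

gaps = find_imagined_steps(described_steps, wireframe_steps)
print(gaps)
```

Each label that comes back is a candidate missing feature or transition to review in the affinity diagram. Exact label matching is deliberately simple; free-text answers would still need manual interpretation first.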

SUS Ineffectiveness

I initially attempted to use the System Usability Scale (SUS) but quickly found that it added no value for this method.

The nature of the AI's "role-played" flow and the issues with wireframe gaps meant the SUS metric did not accurately reflect the design's true usability, leading me to remove it completely from the process.
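For context, the standard SUS calculation collapses ten 1-to-5 answers into a single 0-to-100 score. The sketch below shows that standard scoring rule; it is not a reproduction of the spreadsheet used in this project, and the function name is my own.

```python
def sus_score(responses):
    """Compute a System Usability Scale score from ten answers on a 1-5 scale."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 responses")
    if any(not 1 <= r <= 5 for r in responses):
        raise ValueError("each response must be between 1 and 5")

    total = 0
    for item_number, response in enumerate(responses, start=1):
        if item_number % 2 == 1:
            # Odd items are positively worded: contribution is response - 1.
            total += response - 1
        else:
            # Even items are negatively worded: contribution is 5 - response.
            total += 5 - response
    # Scale the 0-40 sum to the 0-100 range.
    return total * 2.5


print(sus_score([3] * 10))  # all-neutral answers
```

Because the score depends entirely on self-reported answers, a role-played participant that imagines missing steps can still report a smooth experience, which is consistent with the mismatch described above.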

Prompt Optimization

The experiment yielded critical rules for prompt design:

It is essential to ask for one user interview at a time; otherwise Gemini may let one persona's answers influence another's or become confused.

Clear, step-by-step task instructions were crucial, as lengthy, multi-task questions led the AI to jump ahead and ignore intermediate steps.
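These two rules, together with the tone-setting instruction described earlier, can be sketched as a small script builder. This is a minimal illustration under stated assumptions: the `Persona` fields, the function names, the prompt wording, and the example tasks are all hypothetical, and no Gemini API call is shown. Each string would be pasted or sent as its own message, in a separate conversation per persona.

```python
from dataclasses import dataclass


@dataclass
class Persona:
    name: str
    background: str


# Tone-setting text, paraphrasing the instruction given to the AI participant.
TONE = (
    "There are no right or wrong answers. "
    "Your honest feedback helps identify design problems."
)


def opening_prompt(persona):
    """Build the first message: exactly one persona per session."""
    return (
        f"Role-play one usability-test participant only: {persona.name}, "
        f"{persona.background}. {TONE} "
        "Answer only the question you are asked, then wait for the next one."
    )


def task_prompts(tasks):
    """Turn each task into its own short message instead of one long question."""
    return [f"Task {i} of {len(tasks)}: {task}" for i, task in enumerate(tasks, start=1)]


def session_script(persona, tasks):
    """Return the ordered messages for a single simulated interview."""
    return [opening_prompt(persona), *task_prompts(tasks)]


# Hypothetical persona and tasks for illustration.
script = session_script(
    Persona("Alex", "a first-time art buyer"),
    ["Find the live auction.", "Place a bid on one artwork."],
)
for message in script:
    print(message)
```

Keeping one persona per script and one task per message mirrors the two rules above: the model has nothing to cross-reference between participants and cannot skip ahead within a multi-task question.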

Reflections and Next Steps

This experiment confirms that AI role-playing is not a replacement for human user research but a useful accelerator and supplement during low-fidelity phases when resources are limited.

New Research Focus: The findings prompt new research focused on improving accessibility in design and on making designs readable to emerging AI agents.

AI as a "User": As AI becomes increasingly integrated into daily life, the user experience must consider how these agents interact with and interpret applications.

Dual Optimization: This involves developing testing methodologies that acknowledge AI as a growing class of "user," ensuring designs are optimized for both human experience and machine interpretability.

Reach Out with Your Questions!

If you have any questions, please feel free to contact me.
